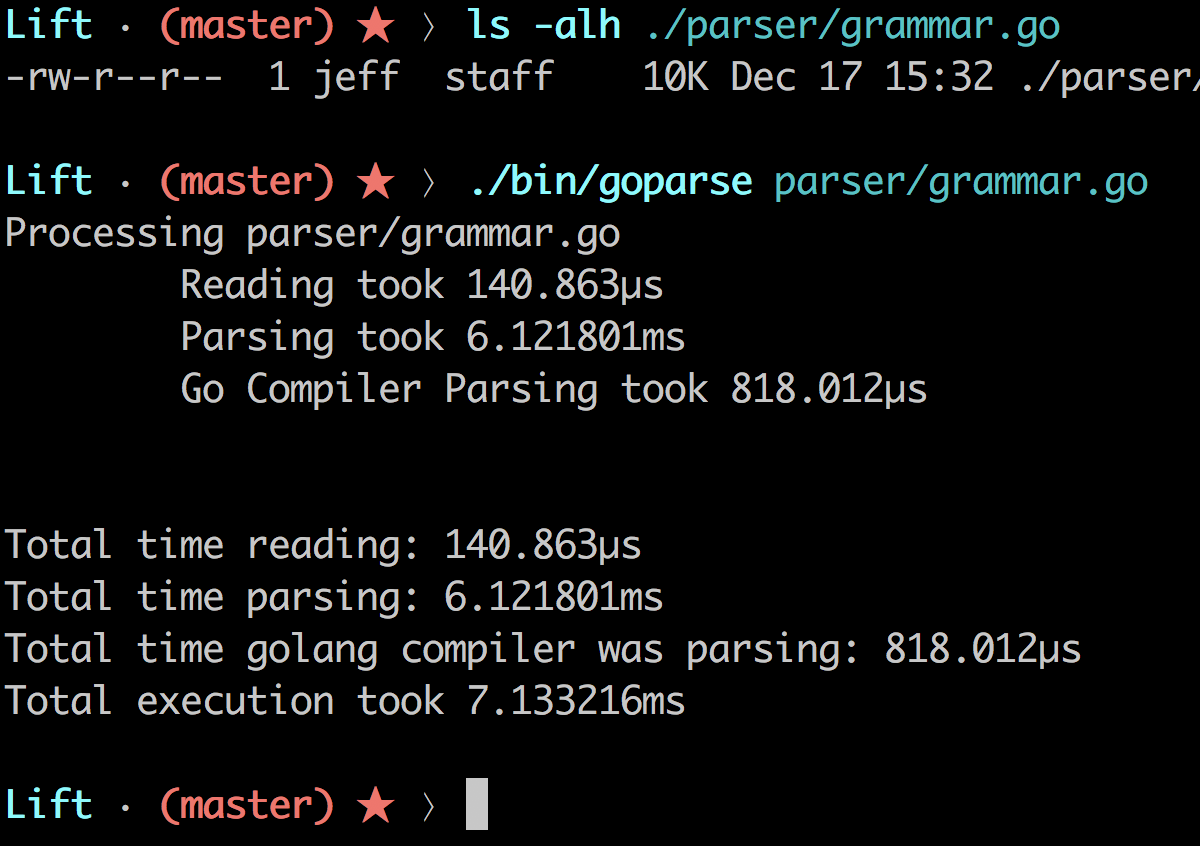

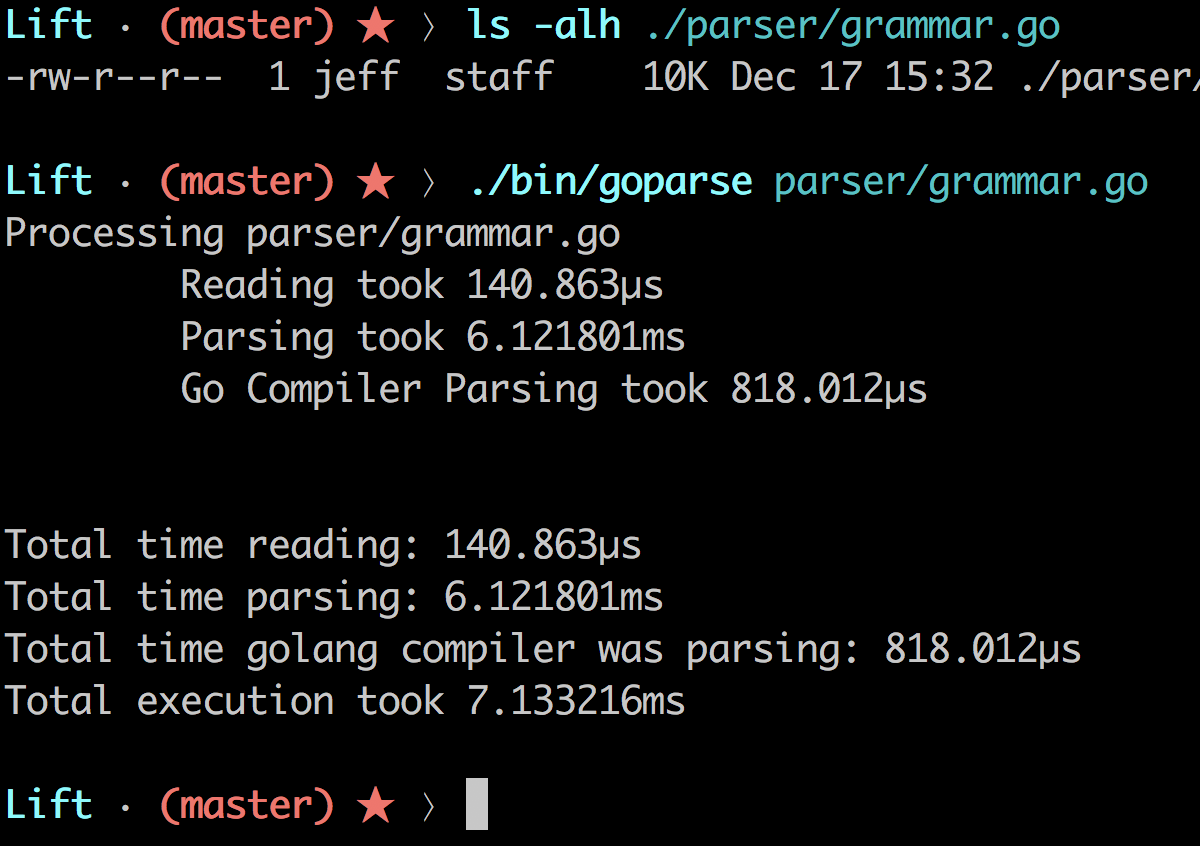

A parser combinator gives a lot of flexibility if you need to parse many languages, usually at the cost of performance. Although sacrificing purity can get you an order of magnitude away from a hand-written parser. The most notable parser in Go is actually it’s own compiler, which is written in go itself. So writing a competing implementation is a good way to compare against hand-optimized implementations on a fair playing field since my parser is in go.

It’s roughly 8 times slower. My parser reads around 1.6MB/s (Go’s is blazing away at 12 MB/s).

Go, the language, is actually a pretty good test of a parser combinator:

- It’s a relatively small grammar to write to parse go code.

- It’s grammar doesn’t have ambiguities like C++.

- It has automatic semicolon insertion which requires an unusual step for the lexing phase.

- It has basic inferred typing (which this parser currently does not do).

Obviously the resulting AST is vastly different between both:

- Go’s parser emits structs that conform directly to the struct of Go code (eg - having structs with field names that directly map to the language)

- Go’s parser assumes all file content is static, which allows for some performance optimizations that I don’t want (I want some IDE-like interactivity).

- My parser emits a general AST that require priori knowledge to walk it (since it’s just a list of nodes). For example, there’s a lot of unnecessary curly braces littered in my AST. It’s fixable with annotations in the parser combinator to exclude generation of those tokens, but hasn’t been implemented yet.

- My parser can be repurposed to another language since it’s a parser combinator.

- Go’s parser does not need to assume infinite lookahead. My parser currently assumes all tokens are lex-ed before parsing occurs. Go’s just-time lexes the next token for parsing.

- Auto-semicolon insertion can be implied in Go’s compiler. So far my compiler needs a hook while lexing to insert implied semicolons.

Some other optimizations probably should be a done at some point:

- Use the Go’s technique of using one int instead of two ints to store token positions. It requires assuming all the files being parsed as one contiguous buffer. The gain in parsing may outweigh the cost to set up a group of files for parsing when an edit is made to a file.

- Have my parser not emit unnecessary tokens (eg - emitting open and close parentheses).

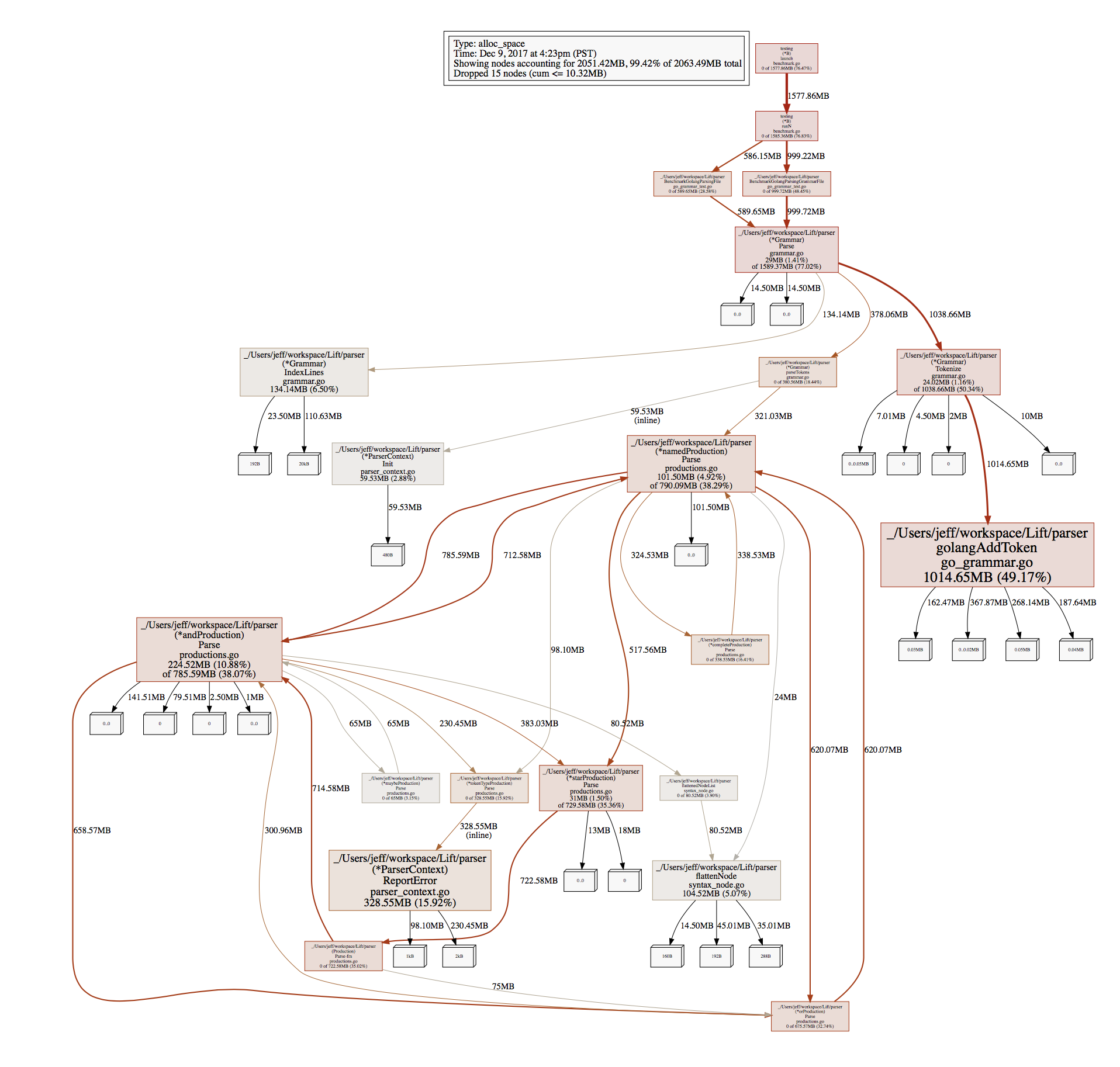

- Error reporting and failure recovery can be upwards of 30% of the cost in my parser. I do want meaningful error messages, but I also don’t want to pay the performance cost of tracking enough information to reproduce those error messages.

It would be great if there was a generalized AST walker for common compiler behaviors (eg - type checking), but it seems like once the AST is created, most of the generalizability is lost until another abstract tree is derived from it (like the LLVM AST).

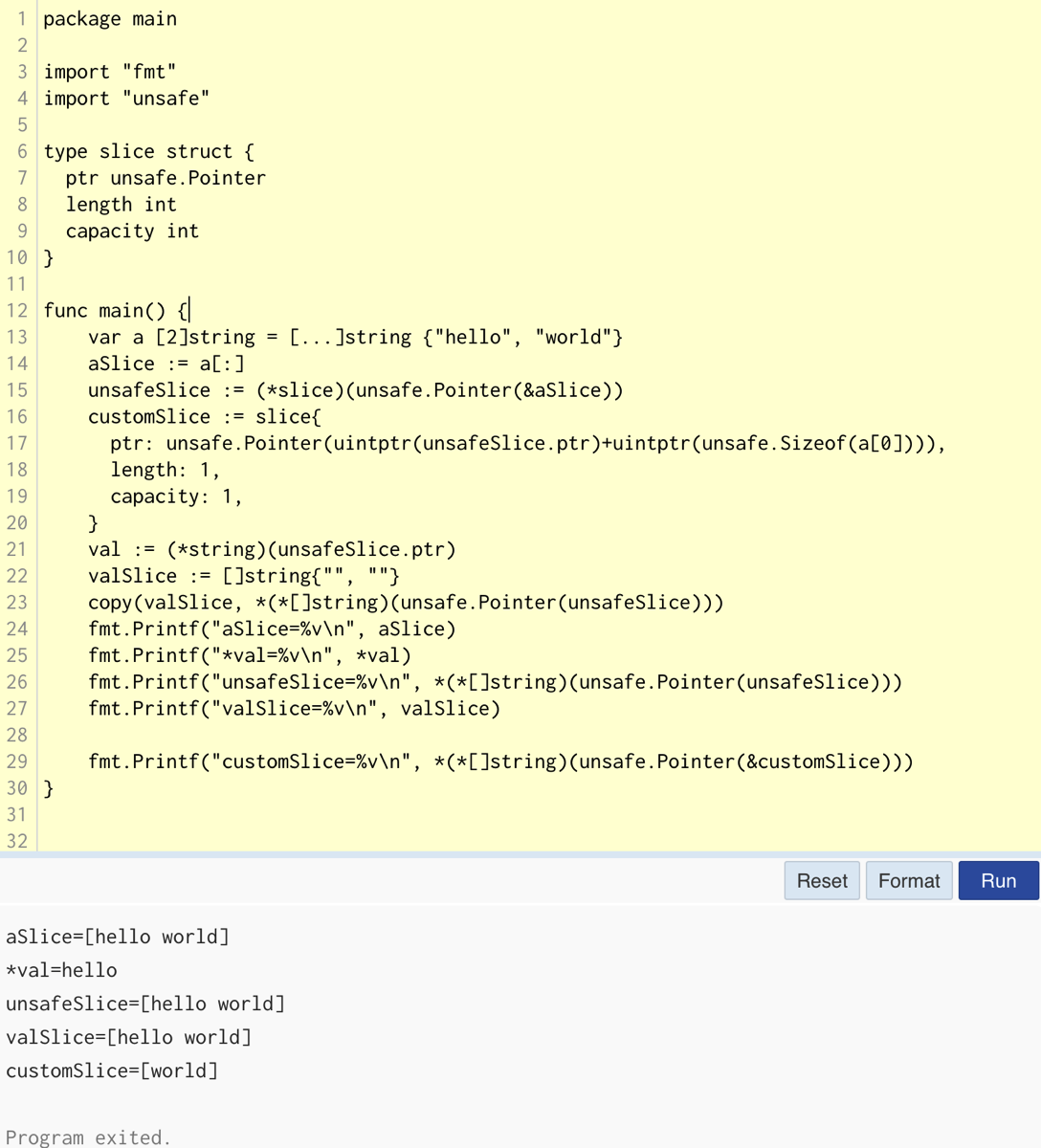

All the optimization needs to be a proper blog post, but here’s a log of various optimization attempts:

Stack-based no pointers:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 50000 38515 ns/op 28288 B/op 215 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 4124223 ns/op 1104208 B/op 23185 allocs/op

BenchmarkTransformingLisp-8 1000000 1151 ns/op 440 B/op 23 allocs/op

PASS

ok _/Users/jeff/workspace/Lift/parser 5.941s

Pointer-based no optimizations:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 20000 59981 ns/op 45984 B/op 991 allocs/op

BenchmarkGolangParsingGrammarFile-8 200 6272572 ns/op 3201888 B/op 111969 allocs/op

BenchmarkTransformingLisp-8 2000000 892 ns/op 184 B/op 16 allocs/op

Pointer-based with pools:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 10000 133741 ns/op 33893 B/op 528 allocs/op

BenchmarkGolangParsingGrammarFile-8 100 14941902 ns/op 1645023 B/op 60928 allocs/op

BenchmarkTransformingLisp-8 2000000 898 ns/op 184 B/op 16 allocs/op

Stack-based with no return value (write to shared state):

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 30000 40670 ns/op 28304 B/op 215 allocs/op

BenchmarkGolangParsingGrammarFile-8 300 4001636 ns/op 1112160 B/op 23356 allocs/op

BenchmarkTransformingLisp-8 1000000 1158 ns/op 440 B/op 23 allocs/op

” with ParserContext field reordering:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 30000 40436 ns/op 28304 B/op 215 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 4000211 ns/op 1112160 B/op 23356 allocs/op

BenchmarkTransformingLisp-8 1000000 1142 ns/op 440 B/op 23 allocs/op

PASS

ok _/Users/jeff/workspace/Lift/parser 5.197s

Stack-based with ParserContext field reordering, but restored return value:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 30000 40384 ns/op 28272 B/op 215 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 3934413 ns/op 1104192 B/op 23185 allocs/op

BenchmarkTransformingLisp-8 1000000 1158 ns/op 440 B/op 23 allocs/op

Stack-based, with reordered fields, and reuse []SyntaxNode (custom pool):

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 50000 38947 ns/op 28192 B/op 152 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 3904994 ns/op 1158586 B/op 17155 allocs/op

BenchmarkTransformingLisp-8 1000000 1166 ns/op 440 B/op 23 allocs/op

Prev + More field reordering:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.’ -run=‘^$’ . ./assert ./byteconv ./cmd/goparse ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 50000 33827 ns/op 12672 B/op 143 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 3767218 ns/op 1110288 B/op 16975 allocs/op

BenchmarkTransformingLisp-8 1000000 1148 ns/op 440 B/op 23 allocs/op

Prev + prealloc + reused SyntaxNode.Children as LexPos:

Lift · (master) ★ ⟩ make bench ~/w/Lift

go test -benchmem -bench=‘Benchmark.*’ -run=‘^$’ . ./assert ./byteconv ./cmd/goparse ./cmd/lift ./parser

goos: darwin

goarch: amd64

BenchmarkGolangParsingFile-8 50000 32636 ns/op 10880 B/op 144 allocs/op

BenchmarkGolangParsingGrammarFile-8 500 3846066 ns/op 1070352 B/op 17695 allocs/op

BenchmarkTransformingLisp-8 1000000 1135 ns/op 440 B/op 23 allocs/op